Greetings from Read Max HQ! In this week’s newsletter, a consideration of the current state of A.I. discourse.

Some housekeeping: This week I appeared on Vox’s “Today, Explained” podcast with Noel King to talk about the Zizians. Also, Jamelle Bouie recently posted video of my appearance talking about Air Force One on the “Unclear and Present Danger” podcast with him and John Ganz.

A reminder: Read Max, and its intermittently thoughtful and intelligent coverage of tech, politics, and culture, depends on paying subscribers to survive. I’m able to make one newsletter free every week thanks to the small percentage of total readers who support the work. If you like this newsletter--if you find it entertaining, educational, informative, or at least “not enervating”--consider becoming a paid subscriber, and helping subsidize the freeloaders who might find some small benefit themselves. At $5/month, it’ll only cost you about a beer every four weeks, or ten beers a year.

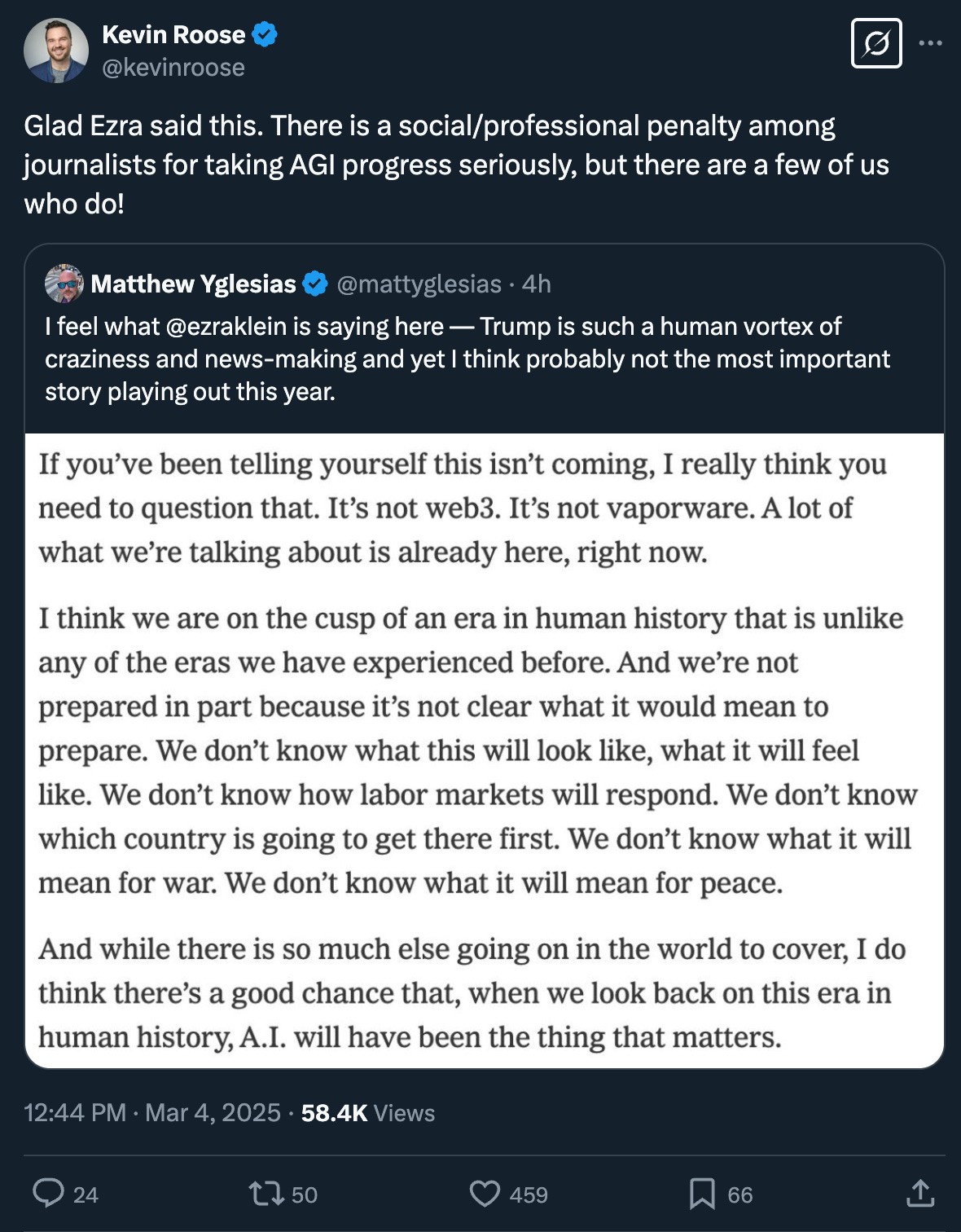

This tweet from Times reporter Kevin Roose (quote-tweeting Matt Yglesias, who’s screen-shotting an Ezra Klein column) crossed my desk on Tuesday:

I don’t mean to pick on Roose, but the sentiments expressed here--both in the tweet and the quoted paragraphs--strike me as good examples of a new development in the endless and accursed Online A.I. Discourse: The backlash to the A.I. backlash.

Since the release of ChatGPT in 2022, A.I. discourse has gone through at least two distinct cycles, at least in terms of how it’s been talked about and understood on social media, and, to a lesser extent, in the popular press. First came the hype cycle, which lasted through most of 2023, during which the loudest voices were prophesying near-term chaos and global societal transformation in the face of unstoppable artificial intelligence, and Twitter was dominated by LinkedIn-style A.I. hustle-preneur morons claiming that “AI is going to nuke the bottom third of performers in jobs done on computers — even creative ones — in the next 24 months.”

When the much-hyped total economic transformation failed to arrive in the shortest of the promised timeframes--and when too many of the highly visible, actually existing A.I. implementations turned out to be worse-than-useless dogshit--a backlash cycle emerged, and the overwhelming A.I. hype on social media was matched by a strong anti-A.I. sentiment. For many people, A.I. became symbolic of a wayward and over-powerful tech industry, and many people who admitted or encouraged the use of A.I., especially in creative fields, were subject to intense criticism.

But that backlash cycle is now facing the early stages of a backlash of its own. Last December, the prominent tech columnist (and co-host, with Roose, of the Hard Fork podcast) Casey Newton wrote a piece called “The phony comforts of AI skepticism,” suggesting that many A.I. critics and skeptics were willfully ignoring the advancing power and importance of A.I. systems:

there is an enormous disconnect between external critics of AI, who post about it on social networks and in their newsletters, and internal critics of AI — people who work on it directly, either for companies like OpenAI or Anthropic or researchers who study it. […]

There is a… rarely stated conclusion… which goes something like: Therefore, superintelligence is unlikely to arrive any time soon, if ever. LLMs are a Silicon Valley folly like so many others, and will soon go the way of NFTs and DAOs. […]

This is the ongoing blind spot of the “AI is fake and sucks” crowd. This is the problem with telling people over and over again that it’s all a big bubble about to pop. They’re staring at the floor of AI’s current abilities, while each day the actual practitioners are successfully raising the ceiling.

In January, Nate Silver wrote a somewhat similar post, “It's time to come to grips with AI,” which more specifically takes “the left” to task for its A.I. skepticism:

The problem is that the left (as opposed to the technocratic center) isn’t holding up its end of the bargain when it comes to AI. It is totally out to lunch on the issue.

For the real leaders of the left, the issue simply isn’t on the radar. Bernie Sanders has only tweeted about “AI” once in passing, and AOC’s concerns have been limited to one tweet about “deepfakes.”

Meanwhile, the vibe from lefty public intellectuals has been smug dismissiveness.

And, this week, Klein’s interview with Ben Buchanan, Biden’s special adviser for artificial intelligence--which arrives with the headline “The Government Knows A.G.I. Is Coming.” Klein’s not as direct as Newton or Silver, but he’s obviously aiming his introduction to the interview at what Newton calls “the ‘A.I. is fake and sucks’ crowd”:

If you’ve been telling yourself this isn’t coming, I really think you need to question that. It’s not web3. It’s not vaporware. A lot of what we’re talking about is already here, right now.

I think we are on the cusp of an era in human history that is unlike any of the eras we have experienced before. And we’re not prepared in part because it’s not clear what it would mean to prepare. We don’t know what this will look like, what it will feel like. We don’t know how labor markets will respond. We don’t know which country is going to get there first. We don’t know what it will mean for war. We don’t know what it will mean for peace.

And while there is so much else going on in the world to cover, I do think there’s a good chance that, when we look back on this era in human history, A.I. will have been the thing that matters.

The substance of the anti-backlash position at its broadest is something like: Actually, A.I. is quite powerful and useful, and even if you hate that, lots of money and resources are being expended on it, so it’s important to take it seriously rather than dismissing it out of hand.

Who, precisely, these columns are responding to is an open question. The objects of accusation are somewhat vague: Newton mentions Gary Marcus, the cognitive scientist and prolific blogger, but then acknowledges that Marcus “doesn’t say that AI is fake and sucks, exactly.” Silver seems to be responding to two tweets from Noah Kulwin and Ken Klippenstein. Klein doesn’t specify anyone at all. The ripostes are not so much about the many rigorous A.I.-critical voices that have emerged--taxonomized in this Benjamin Riley post, which serves as an excellent guide to some of the sharpest and smartest people currently writing on the subject--and more about an ambient, dismissive anti-A.I. sensibility that’s emerged on social media, and that animates, e.g., the spiteful banter that leads Roose to say he suffers a “social penalty for taking AGI progress seriously.”

But at the same time I also don’t think that this backlash-to-the-backlash is limited to Big Accounts complaining about their Twitter mentions, either. Speaking anecdotally, I see more pushback than I used to against some of the more vehement A.I. critics, and defenses of A.I. usage from people who are otherwise quite critical of the tech industry. I wouldn’t say we’re in a new hype cycle--yet--but it’s clear that the discursive ground has shifted slightly in favor of A.I.

Why is the attitude changing? Some proponents of a new hype cycle broadly if vaguely invoke vibes and rumors and words from sources, as though OpenAI is just a few model-weight tweaks away from releasing HAL-9000 (Klein, in his column this week: “Person after person… has been coming to me saying… We’re about to get to artificial general intelligence”; Roose, a few months ago: “it is hard to impress… how much the vibe has shifted here… twice this month I’ve been asked at a party, ‘are you feeling the AGI?’”). The problem with using “A.I. insiders” as a guide to A.I. progress is that these insiders have been whispering stuff like this for years, and at some point I think we need to admit that even the smartest people in the industry don’t have much credibility when it comes to timelines.

But it’s not all whispers at parties. There are public developments that I think help explain some of the renewed enthusiasm for A.I., and pushback against aggressive skepticism. Take, for example, the new vogue for “Deep Research” and visible chain-of-thought models. OpenAI, Google, and XAI all now have products that create authoritative “reports” based on internet searches, and whose process can be made legible to the user as a step-by-step “chain of thought.” This format can be as confidence-inspiring in its own way as the “human-like chatbot” format of the original ChatGPT was. As Arvind Narayanan puts it: “We're seeing the same form-versus-function confusion with Deep Research now that we saw in the early days of chatbots. Back then people were wowed by chatbots' conversational abilities and mimicry of linguistic fluency, and hence underappreciated the limitations. Now people are wowed by the ‘report’ format of Deep Research outputs and its mimicry of authoritativeness, and underappreciate the difficulty of fact-checking 10-page papers.”

This length and stylistic confidence make it easy to convince yourself that LLMs are still improving by leaps and bounds, even as evidence gathers that progress on improving capability is slowing. Klein even mentions Deep Research in his interview: “I asked Deep Research to do this report on the tensions between the Madisonian Constitutional system and the highly polarized nationalized parties we now have. And what it produced in a matter of minutes was at least the median of what any of the teams I’ve worked with on this could produce within days.”1 But since the debut of ChatGPT it’s been clear that the format and “character” in which an LLM generates text has an enormous effect on how users understand and trust the output. As Simon Willison and Benedict Evans--two bloggers who are not kneejerk A.I. critics--have both pointed out, for all of its strengths, Deep Research has the same ineradicable flaws as its LLM-app predecessors, masked by its authoritative tone. “It's absolutely worth spending time exploring,” Willison writes, “but be careful not to fall for its surface-level charm.”

But if Deep Research is providing some hope for forward momentum, I think a broader--if somewhat less specific or sexy--development has softened the ground a bit for a renewed A.I. hype: the straightforward and observable facts that generative A.I. output has gotten much more reliable since 2022, and more people have found ways to incorporate A.I. into their work in ways that seem useful to them. The latest models are more dependable than the GPT 3-era models that were many people’s first interaction with LLM chatbots--and, crucially, many of them are able to provide citations and sources that allow you to double-check the work. I don’t want to overstate the trustworthiness of any text produced by these apps, but it’s no longer necessarily the case that, say, asking an LLM a question of fact is strictly worse than Googling it, and it’s much easier to double-check the answers and understand its sourcing than it was just a year ago.

These fairly obvious improvements go hand in hand with a larger number of people who don’t have a particular ideological or financial commitment to A.I. who’ve found ways to integrate it into their work, coming up with more clearly productive uses for the models than the useless, nefarious or obviously bogus suggestions posed by the A.I. influencers who annoyingly dominated the first hype wave. I don’t use A.I. much for writing or research (old habits die hard), but I’ve found it extremely useful for creating and cleaning up audio transcriptions, or for finding tip-of-my-tongue words and phrases. (It’s possible that all these people, myself included, are fooling themselves about the amount of time they’re saving, or about the actual quality of the work being produced--but what matters in the question of hype and backlash is whether people feel as though the A.I. is useful.)

None of which is to say, of course, that A.I. is universally useful, harmless, or appropriate. Aggressive A.I. integration into existing products like Google Search and Apple notifications over the last couple years has mostly been a highly public, who-asked-for-this? dud, and probably the most widespread single use for ChatGPT has been cheating on homework. But it’s much harder to make the case that A.I. products are categorically useless and damaging when so many people seem able to use them to adequately supplement tasks like writing code, doing research, or translating or proofing texts, with no apparent harm done.

And even if it undermines more aggressive claims about the systems’ uselessness or fraudulence, I tend to think that more widespread consumer adoption of A.I. tools is, on balance, a good development for A.I. skepticism. In my own capacity as an A.I. skeptic I’m desperate for A.I. to be demystified, and shed of its worrying reputation as a one-size-fits-all solution to problems that range from technical to societal. I think--I hope, at any rate--that widespread use may help accomplish that demystification: The more people use A.I. with some regularity, the more broad familiarity they’ll develop with its specific and consistent shortcomings; the more people understand how LLMs work from practical experience, the more they can recognize A.I. as an impressive but flawed technology, rather than as some inevitable and unchallengeable godhead.

In many ways I’m sympathetic to the backlash-to-the-backlash. I often find myself annoyed when I see smug, wholesale dismissal of A.I. systems on Bluesky. As a general rule, I think it serves critics well to be curious and open when it comes to the object of criticism. But where I draw the line on A.I. openness, personally, is “artificial general intelligence.”

I don’t like this phrase, and I wish journalists would stop using it. I’m not sure that it’s well-known enough outside of the world of people following this stuff that “A.G.I.” doesn’t mean anything specific. It tends to be casually thrown around as though it refers to a widely understood technical benchmark, but there’s no universal test or widely accepted, non-tautological definition. (“A canonical definition of A.G.I.,” Klein’s interview subject Buchanan says, “is a system capable of doing almost any cognitive task a human can do.” 🆗.) Nor, I think we should be clear, could there be: Unfortunately for the haters, “intelligence”--or, now, “general intelligence”--is not an empirical quality or a threshold that can be achieved but a socio-cultural concept whose definition and capaciousness emerges at any given point in time from complex and overlapping scientific, cultural, and political processes.

None of which, of course, has stopped its wide adoption to mean some--really any--kind of important A.I. achievement. In practice it’s used to refer to dozens of distinct scenarios from “apocalyptic science-fiction singularity” to “particularly powerful new LLM” to “hypothetical future point of wholesale labor-market transformation.” This collapse tends to obfuscate more than it clarifies: When you say “A.G.I. is coming soon,” do you mean we’re about to flip the switch and birth a super-intelligence? Or do you mean that a computer is going to do email jobs? Or do you just mean that pretty soon A.I. companies will stop losing money?

I’m not even joking about that, by the way--among the only verifiable definitions of “A.G.I.” out there is a contractual one between Microsoft and OpenAI, currently bound in a close partnership legally breakable upon development of A.G.I. According to The Information, the agreement between the companies declares that OpenAI will have achieved A.G.I.

only when OpenAI has developed systems that have the “capability” to generate the maximum total profits to which its earliest investors, including Microsoft, are entitled, according to documents OpenAI distributed to investors. Those profits total about $100 billion, the documents showed.

The documents still leave some things open to interpretation. They say that the “declaration of sufficient AGI” is in the “reasonable discretion” of the board of OpenAI. And the companies may still have differing views on whether the existing technology has a massive, profit-generating capability.

There is something very funny and Silicon Valley about A.G.I.’s meaning having shifted from a groovy “rapturous technosingularity” to a lawyerly “$100b in profits,” but it’s also worth noting that at least one person might benefit in quite a direct and literal way from the increasingly broad and casual use of “A.G.I.,” in the press as well as at Silicon Valley parties: Sam Altman.

But I think what really gets to me about the overuse of “A.G.I.” is not so much the vagueness or the fact that Sam Altman might profit from it, but the teleology it imposes--the way its use compresses the already and always ongoing process of A.I. progress, development, and disruption into a single point of inflection. Instead of treating A.I. like a normal technological development whose emergence and effect is conditioned by the systems and structures already in place, we’re left anxiously awaiting a kind of eschatological product announcement--a deadline before which all we can do is urgently and at all costs prepare, a messianic event after which we will no longer be in control. This kind of urgency and anxiety serves no one well, except for the people who’ve found a way to profit from cultivating it.

It’s an off-the-cuff interview and I wouldn’t want to make too much of it, but I thought it was interesting that Klein’s immediate illustrative example didn’t involve a way that A.I. might replace him, but a way it might replace people who work for him. Whatever else you can say about this technology, it has a way of making people think like bosses.

I'm not on Bluesky or anything so I don't have perspective on some of the blanket AI dismissals, but I am a tech worker and am very much on the Luddite side of things with respect to LLMs. The Luddite view is frequently mischaracterized as "AI is fake and it sucks" and that's not right at all. But this characterization serves a purpose insofar as it allows the Casey Newtons of the world to sidestep the actual objections entirely. These objections range from the obvious, that the technology only works reliably in very limited domains or where accuracy & reliability aren't that important, to the more subtle, that whatever productivity gains from AI might occur will be fully captured by the capitalist class.

Klein's interview with Buchanan is telling. Klein pushed him pretty hard about why no one on Buchanan's watch really seemed to plan for the social disruption that would occur should some sort of AGI emerge soon. I think the answer to this is obvious: neither Buchanan nor the people he worked with really believe that a world-changing AI will actually happen. But it never hurts to make a bold prediction: fully self-driving cars in 2016. No, wait, 2019.... Now....who knows.

Instead of fully self-driving cars, we got Teslas covered in cameras constantly running in "sentry mode", surveilling everyone and everything. *This* is the Luddite objection. Hype, promises, massive over-investment, extra surveillance, cars unsafe for pedestrians, and massive asset price inflation for the benefit of the few.

There is also some conceptual slippage going on: when I, a Luddite, say AI is fake, I refer to the AI industry, whose upside-down economics are premised on the magical thinking that LLMs will lead to AGI, loosely defined. Or according to another view, the AI industry is essentially a fossil fuels & data center political economy play where the actual models are sort of secondary.

It's self-evident that there is real technology there and it can be passing useful for people in some ways. I used it the other day to help me figure out what to wear for an event and to brainstorm about a project I am working on. Handy! I couldn't imagine paying actual money for these conveniences, and less still for the ability to "vibecode" a recipe generator based on an image of my fridge. The lack of a killer consumer app, the lack of corporate uptake of OpenAI integration apart from some big consulting shops, and the complete commodification of LLMs in general make the industry pretty fake even if people can get a bit of mileage out of LLMs in their everyday lives.

I'm not even overly frugal by nature; I pay for your content!

I've adopted an AI mantra of sorts to smooth out the highs and lows of, as a technically-minded person, being interested in and every once in a great while aided by LLM type things, and horrified/frustrated at the centrality of the discussion, the costs, the claims - 'it is interesting that computers can do that now!'.

Because, well, it is! Things like making new text that is like old text in interesting ways but different in interesting ways are interesting! It was also interesting when computers were bad at chess and then were good at chess, and when pictures made on computers in movies went from looking bad and niche to looking pretty good (and then often bad again). It was interesting the first time a computer accepted a voice command (in the '60s) and a robot car drove across the country (in the '80s) and the first time someone had weird itchy feelings about a chatbot (in the '70s). It was interesting when you could push buttons and math answers came out! Computers are interesting! Sometimes they are useful!

But also, I dunno, *they're just computers*. The world filling up with computers has not radically bent its economic trajectory from the Before Times because they mostly do dumb things, and like anything they can mostly do things that don't take a lot of new figuring and waiting for happy accidents, and maybe they do them a little better (or actually do them a little worse because they're being driven to market by enormous piles of capital that can Do Things), and there are profound incentives for the bored pile of money, with its chip foundries so expensive they need to be kept in operation like Cold War shipyards, and the piles of data the wise were saying they probably shouldn't be collecting even if the reasons seemed thin, and the simple fact of being excited and blinkered by your work, to say that it's All Over- whatever that means. The fact that 'it is interesting that computers can do that now' places them firmly in a pantheon of things that have often been not super useful, or bounded, or premature, or misunderstood, or in the end, not that interesting.

Like, the chatbots are making fewer mistakes and seeming more like a search *because they're doing search.* Summoning up a surface summary on Madison et al is interesting, but also, it can do that *because Wikipedia is sitting right there* with ten page surface summaries.

The killer app for LLMs is text transformation, full stop. That's really neat! It's neat that my mess of poorly formatted notes cut and pasted from ten places are now formatted. It's neat that something can pull the topic sentences of ten papers and put them in one paper instead of looking at them all in different places on the front page of a search. But also these things in some ways don't seem that surprising if you said 'I took all the public facing documents in the world (and a few we stole because those are so bad) and had a computer look at them a billion times.'