We are living through an unprecedented period. Genuinely astonishing breakthroughs in the capabilities of large language models have arrived just as millions of overexcited Twitter hustlers and Silicon Valley venture capitalists found themselves in need of a new pyramid scheme/investment vision/cudgel against perceived enemies. The meeting of these two great forces--essentially, technological development and the end of low interest rates--has, however, produced a ton of bullshit, as various hucksters, liars, evangelists, and morons spout unfulfillable promises and apocalyptic visions to the many journalists who have still not learned their lesson about trusting tech executives.

What can a curious person do? Why--subscribe to Read Max! I’ve been collecting intelligent, reasonable, and thought-provoking articles, essays, columns, Twitter threads, and YouTube videos about A.I. for several months now, sharing them with paying subscribers as part of the weekly roundup column. I’m now collecting all of these articles into something like an “A.I. syllabus”--pieces that have helped me orient myself to this new generation of generative A.I. apps and to the A.I. research scene in general. It’s not a comprehensive list by any means: Rather, it’s (in the Read Max fashion) an idiosyncratic and biased set of pieces that have stayed with me, changed my thinking, or otherwise crystallized ideas.

The plan is to continue updating it (erratically and usually late) as I collect more articles and share them in the paid roundup, so if and as you read interesting things, please send them to me. The list as it stands was already made possible thanks to suggestions from a bunch of readers, not all of whom I managed to thank but all of whose messages I read, I promise.

A quick note about subscribing: Usually I keep big reading lists like this behind the paywall, to encourage readers to upgrade their subscriptions. But since A.I. is such a hot topic, and since I think it’s important that people can access as much material as possible to form considered opinions--i.e., not just Thomas Friedman columns or whatever--I’m leaving this one open. I will continue to do paywalled roundup columns that include A.I. writing, and add to this on a slight time delay, but I will keep this post open to anyone, paid and free alike. If you find yourself getting $5 to $50 (or more!) worth of value out of it, please consider subscribing.

Sections

Read Max on A.I.

What I’ve written myself about A.I. so far

What is A.I.?

What is “A.I.”? What is a large language model? How should we think about these applications? What are useful metaphors and frameworks?

>“ChatGPT Is a Blurry JPEG of the Web” by Ted Chiang

Who better to mount an expedition to explain large language models than the world’s most famous technical writer, Ted Chiang? Chiang, who I have to assume every Read Max reader knows as one of the great sci-fi authors of the 21st century, presents a characteristically intriguing analogy to help explain what ChatGPT is and how it works:

What I’ve described sounds a lot like ChatGPT, or most any other large language model. Think of ChatGPT as a blurry jpeg of all the text on the Web. It retains much of the information on the Web, in the same way that a jpeg retains much of the information of a higher-resolution image, but, if you’re looking for an exact sequence of bits, you won’t find it; all you will ever get is an approximation. But, because the approximation is presented in the form of grammatical text, which ChatGPT excels at creating, it’s usually acceptable. You’re still looking at a blurry jpeg, but the blurriness occurs in a way that doesn’t make the picture as a whole look less sharp. […]

Imagine what it would look like if ChatGPT were a lossless algorithm. If that were the case, it would always answer questions by providing a verbatim quote from a relevant Web page. We would probably regard the software as only a slight improvement over a conventional search engine, and be less impressed by it. The fact that ChatGPT rephrases material from the Web instead of quoting it word for word makes it seem like a student expressing ideas in her own words, rather than simply regurgitating what she’s read; it creates the illusion that ChatGPT understands the material. In human students, rote memorization isn’t an indicator of genuine learning, so ChatGPT’s inability to produce exact quotes from Web pages is precisely what makes us think that it has learned something. When we’re dealing with sequences of words, lossy compression looks smarter than lossless compression.

I really liked this piece, which made intuitive sense to me both as a way of thinking about large language models and as an explanation for ChatGPT’s immediate impressiveness, but I feel like I should flag a few dissenting Twitter threads from A.I. researchers:

Andrew Lampinen, a research scientist at Alphabet’s A.I. subsidiary DeepMind, who argues that large language models “can learn generalizable strategies that perform well on truly novel test examples rather than just memorizing + rephrasing”

Raphael Milliere, a Columbia professor in cognitive science who says Chiang’s metaphor provides “at best a surface-level description of what they do” and “a satisfying illusion of understanding it”

Maxim Raginsky, a University of Illinois professor who agrees with Chiang’s metaphor but tries to complicate it

One thing that has become clear to me as I read more about generative A.I. technology is that there are real, incommensurable, often philosophical disputes about the nature of these technologies even among clear experts in the field. And interestingly, attempts to differentiate between the human mind and a large language model can be critiqued in two directions -- you can say that LLMs are more sophisticated than Chiang is allowing, as the researchers tweeting above do. But you can also say that human cognition is maybe not as special as we’d like to think, and “lossy compression” is itself a kind of thinking.

I, a stupid person, am not really in a position to referee this dispute, except to say that my whole life is based on maintaining a “satisfying illusion of understanding” through “surface-level descriptions,” so if that’s really the problem with Chiang’s piece, it seems fine to me.

>“Talking About Large Language Models” by Murray Shanahan

This paper, from December but continually updated by Shanahan, is right up my alley. As I wrote last month, there’s an extremely frustrating lack of specificity or precision in a lot of writing about A.I. -- not only from non-science journalists, as you might expect, but even sometimes from particularly breathless (or careless) people who work in and around the A.I. industry. Here Shanahan, a senior scientist at DeepMind, writes about the need for “scientific precision” in the descriptions of how large language models work:

Thanks to rapid progress in artificial intelligence, we have entered an era when technology and philosophy intersect in interesting ways. Sitting squarely at the centre of this intersection are large language models (LLMs). The more adept LLMs become at mimicking human language, the more vulnerable we become to anthropomorphism, to seeing the systems in which they are embedded as more human-like than they really are. This trend is amplified by the natural tendency to use philosophically loaded terms, such as “knows”, “believes”, and “thinks”, when describing these systems. To mitigate this trend, this paper advocates the practice of repeatedly stepping back to remind ourselves of how LLMs, and the systems of which they form a part, actually work. The hope is that increased scientific precision will encourage more philosophical nuance in the discourse around artificial intelligence, both within the field and in the public sphere. […]

Suppose we give an LLM the prompt “The first person to walk on the Moon was ”, and suppose it responds with “Neil Armstrong”. What are we really asking here? In an important sense, we are not really asking who was the first person to walk on the Moon. What we are really asking the model is the following question: Given the statistical distribution of words in the vast public corpus of (English) text, what words are most likely to follow the sequence “The first person to walk on the Moon was ”? A good reply to this question is “Neil Armstrong”.
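To make Shanahan’s point concrete, here is a deliberately crude sketch in Python, assuming a tiny made-up corpus: a bigram model that only counts which word follows which, then answers with the most frequent follower. Real LLMs use neural networks over tokens and far longer contexts, so treat this as a caricature of the statistical question Shanahan describes, not as how any production model works. The function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_counts(corpus):
    """Count, for every word in the corpus, how often each other word follows it."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def next_word_distribution(follows, prompt):
    """Turn the follower counts for the prompt's last word into probabilities."""
    last = prompt.lower().split()[-1]
    counts = follows.get(last, Counter())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()} if total else {}

def most_likely_next(follows, prompt):
    """Return the single most probable next word, or None if the last word was never seen."""
    dist = next_word_distribution(follows, prompt)
    return max(dist, key=dist.get) if dist else None

# Hypothetical toy corpus; a real model is trained on a vast body of text.
corpus = [
    "the first person to walk on the moon was armstrong",
    "the first person to walk on the moon was armstrong",
    "the first person to orbit the earth was gagarin",
    "the moon was full",
]
follows = train_bigram_counts(corpus)
prompt = "The first person to walk on the Moon was"
print(next_word_distribution(follows, prompt))  # {'armstrong': 0.5, 'gagarin': 0.25, 'full': 0.25}
print(most_likely_next(follows, prompt))  # armstrong
```

The toy only looks at the word “was,” so “gagarin” and “full” get some probability too. A real LLM conditions on the whole prompt, but the question it answers is still the one Shanahan describes: what words are likely to come next.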

(While I think Shanahan’s suggestion that we avoid anthropomorphism in favor of technical specifics is good in and of itself, it does raise the uncomfortable question of whether, when you ask a human “who was the first person to walk on the moon?”, what the brain is doing in response is calculating the words most likely to follow the question.)

>“Who are we talking to when we talk to these bots?” by Colin Fraser

One of the best pieces I’ve read on ChatGPT--what it is, how to conceptualize it, and what to remember and take note of while using it and reading about it--is by the Meta data scientist Colin Fraser. It’s called “Who are we talking to when we talk to these bots?” and it’s long but worth it; to me, the key insight Fraser presents is the necessity of always keeping in mind that there is a “character” that ChatGPT is “performing,” and that the “chat” interface of something like ChatGPT is an essential component of what makes it so alluring:

Three components, I believe, are essential: a ChatGPT trinity, if you will. First, there is the language model, the software element responsible for generating the text. This is the part of the system that is the fruit of the years-long multimillion dollar training process over 400 billion words of text scraped from the web, and the main focus of many ChatGPT explainer pieces (including my own). The second essential component is the app, the front-end interface that facilitates a certain kind of interaction between the user and the LLM. The chat-shaped interface provides a familiar user experience that evokes the feeling of having a conversation with a person, and guides the user towards producing inputs of the desired type. The LLM is designed to receive conversational input from a user, and the chat interface encourages the user to provide it. As I’ll show in some examples below, when the user provides different forms of input, it can cause the output to go off the rails, but the chat interface provides an extremely strong signal to the user about the type of input that is preferred.

The final component is the most abstract and least intuitive, and yet it may be the most important piece of the puzzle, the catalyst that brought ChatGPT 100 million users (allegedly). It is the fictional character in whose voice the language model is trained to generate text. Offline, in the real world, OpenAI have designed a fictional character named ChatGPT who is supposed to have certain attributes and personality traits: it’s “helpful”, “honest”, and “truthful”, it is knowledgeable, it admits when it’s made a mistake, it tends towards relatively brief answers, and it adheres to some set of content policies. Bing’s attempt at this is a little bit different, their fictional “Chat Mode” character seems a little bit bubblier, more verbose, asks a lot of followup questions, and uses a lot of emojis. But in both cases, and in all of the other examples of LLM-powered chat bots, the bot character is a fictional character which only exists in some Platonic realm. It exists to serve a number of essential purposes. It gives the user someone to talk to, a mental focal point for their interaction with the system. It provides a basic structure for the text that is generated by each party of the interaction, keeping both the LLM and the user from veering too far from the usage that the authors of the product desire.

An extremely powerful illusion is created through the combination of these elements. It feels vividly as though there’s actually someone on the other side of the chat window, conversing with you. But it’s not a conversation. It’s more like a shared Google Doc. The LLM is your collaborator, and the two of you are authoring a document together. As long as the user provides expected input—the User character’s lines in a dialogue between User and Bot—then the LLM will usually provide the ChatGPT character’s lines. When it works as intended, the result is a jointly created document that reads as a transcript of a conversation, and while you’re authoring it, it feels almost exactly like having a conversation.
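Fraser’s “shared Google Doc” framing can be sketched in a few lines of Python. Everything here is hypothetical: the `User:`/`Bot:` labels, the character description, and the function names are illustrative assumptions, not OpenAI’s or Microsoft’s actual formats. The idea is that the app flattens the conversation into one document ending in an open `Bot:` line, the model continues that document, and the app cuts the continuation off if the model starts writing the User’s lines too.

```python
def render_transcript(character, turns):
    """Flatten a chat into one text document that ends with an open "Bot:" line.

    character: a description of the fictional bot character (hypothetical wording).
    turns: a list of (speaker, text) pairs, where speaker is "User" or "Bot".
    """
    lines = [character, ""]
    for speaker, text in turns:
        lines.append(f"{speaker}: {text}")
    lines.append("Bot:")  # the language model is asked to continue the document from here
    return "\n".join(lines)

def extract_bot_reply(continuation):
    """Keep only the Bot's line, discarding anything after the model starts writing "User:"."""
    return continuation.split("\nUser:", 1)[0].strip()

character = "The following is a conversation with Bot, a helpful and honest assistant."
doc = render_transcript(character, [("User", "Who was the first person on the Moon?")])
print(doc)

# A made-up continuation: the model keeps co-authoring the document past its own turn.
continuation = " Neil Armstrong, in 1969.\nUser: Thanks!\nBot: You're welcome."
print(extract_bot_reply(continuation))  # Neil Armstrong, in 1969.
```

The truncation step is where the illusion is maintained: without it, the reader would see the model happily writing both sides of the “conversation.”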

(Thank you to reader Roland C. for sending this one!)

>“Eight Things to Know about Large Language Models,” by Samuel R. Bowman

If you need an explainer/reminder of the current state of LLMs in research and popular discourse, this paper from NYU scholar Sam Bowman is relatively fair and straightforward:

The widespread public deployment of large language models (LLMs) in recent months has prompted a wave of new attention and engagement from advocates, policymakers, and scholars from many fields. This attention is a timely response to the many urgent questions that this technology raises, but it can sometimes miss important considerations. This paper surveys the evidence for eight potentially surprising such points:

1. LLMs predictably get more capable with increasing investment, even without targeted innovation.

2. Many important LLM behaviors emerge unpredictably as a byproduct of increasing investment.

3. LLMs often appear to learn and use representations of the outside world.

4. There are no reliable techniques for steering the behavior of LLMs.

5. Experts are not yet able to interpret the inner workings of LLMs.

6. Human performance on a task isn’t an upper bound on LLM performance.

7. LLMs need not express the values of their creators nor the values encoded in web text.

8. Brief interactions with LLMs are often misleading.

>“Think of language models like ChatGPT as a ‘calculator for words,’” by Simon Willison

A somewhat enthusiastic piece about the possibilities of large language models and writing can be found at Simon Willison’s blog, where he describes them as a “calculator for words”:

I like to think of language models like ChatGPT as a calculator for words.

This is reflected in their name: a “language model” implies that they are tools for working with language. That’s what they’ve been trained to do, and it’s language manipulation where they truly excel.

Want them to work with specific facts? Paste those into the language model as part of your original prompt!
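Willison’s advice to paste facts into the prompt amounts to building a prompt string around your own material. A minimal Python sketch, assuming a hypothetical instruction wording and function name (no particular model or API is implied):

```python
def build_grounded_prompt(facts, question):
    """Paste the supplied facts into the prompt and ask for an answer based only on them."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return (
        "Answer the question using only the facts below. "
        "If they don't contain the answer, say so.\n\n"
        f"Facts:\n{fact_lines}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

facts = [
    "Apollo 11 landed on the Moon on July 20, 1969.",
    "Neil Armstrong was the first person to walk on the Moon.",
]
print(build_grounded_prompt(facts, "Who was the first person to walk on the Moon?"))
```

The design choice is the one Willison describes: the model is asked to manipulate language it has been handed rather than recall facts from its training data, though nothing guarantees it will stick to the supplied facts.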

Understanding A.I. discourse

Who is talking about A.I.? What is the debate, what are the stakes, and what are the camps?

>“A survey of perspectives on AI” by Ludwig Yeetgenstein

As I read about “A.I.” and try to figure out how I should feel about or understand it, I’ve found it useful to read other people’s rundowns or taxonomies of the various positions that smart people have taken on the technology. This “survey of perspectives on AI” by “Ludwig Yeetgenstein” is an excellent and succinct rundown of a number of positions that ends on what I guess I’d describe as an “A.I.-curious” note:

Contrary to these fears, our thought may well become more powerful and more expansive through this new technology. We may look to the history of computer chess as an illustrative example. When IBM’s Deep Blue defeated Garry Kasparov in 1997, there was a sense that human chess as a pursuit was now “over”, with no reason to continue. Similarly, Lee Sedol retired after his defeat to AlphaGo in 2016, stating that “AI is an entity that cannot be defeated”. And yet now, in 2023, Chess.com is the number one ranked game in the iOS app store. Human chess is thriving, more popular than it’s ever been in its entire history. Human grandmasters are stronger than ever because they can train against far superior AI opponents, who can suggest new strategies and ideas that are beyond their mortal reach. New AI tools may do the same for creative pursuits like writing and visual art.

>“Unpluggers, Deflators, and Mantic Pixel Dream Girls” by Frank Lantz (Part 1 | Part 2 | Part 3)

I’m similarly interested in the game designer Frank Lantz’s ongoing series outlining (and criticizing) different positions, starting with “Doomers,” “Deflators,” and a position midway between the two. (Lantz is sympathetic to “deflators,” his term for something like skeptics, but finds himself unable to fully get on board.)

“Artificial General Intelligence”

What is AGI? What does intelligence mean? Are these systems intelligent, nearly intelligent, or not at all intelligent?

>“Does GPT-4 Really Understand What We’re Saying?” by Brian Gallagher

So understanding, like information, has several meanings—more or less demanding. Do these language models coordinate on a shared meaning with us? Yes. Do they understand in this constructive sense? Probably not.

I’d make a big distinction between super-functional and intelligent. Let me use the following analogy: No one would say that a car runs faster than a human. They would say that a car can move faster on an even surface than a human. So it can complete a function more effectively, but it’s not running faster than a human. One of the questions here is whether we’re using “intelligence” in a consistent fashion. I don’t think we are.

>“Sparks of Artificial General Intelligence: Early experiments with GPT-4” by Sébastien Bubeck, Varun Chandrasekaran, Ronen Eldan, Johannes Gehrke, Eric Horvitz, Ece Kamar, Peter Lee, Yin Tat Lee, Yuanzhi Li, Scott Lundberg, Harsha Nori, Hamid Palangi, Marco Tulio Ribeiro, and Yi Zhang

Are we seeing “sparks of AGI” in ChatGPT and other applications of the recent generation of large language models? A paper published a few weeks ago makes the claim that OpenAI’s GPT-4 “could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system,” based on its ability to “solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting” at “strikingly close to human-level performance.”

If you don’t want to read the paper, the video above is a reasonable précis, but be warned that it’s an enthusiastic summary of a somewhat over-eager paper, so take it with a grain of salt. (By which I mean, the paper could just as easily have been written as a summary of GPT-4’s impressive performance at certain problem-solving tasks without needing to use the initialism “AGI” at any point. But using “AGI” has certainly given the paper a larger audience than it might otherwise have received!)

>“Replicate/Test SoAGI: Early experiments with GPT-4” by NLPurr

As longtime readers know, I’m just a guy, not anyone who has the expertise, intelligence, or patience to parse this kind of debate. But I appreciate the ongoing efforts of a PhD student who goes by the handle “NLPurr” to replicate some of the results of the “sparks” paper, which you can read about in this Notion document. As NLPurr put it on Twitter, “this paper is not reproducible.” This doesn’t mean the paper is wrong, per se: As I’ve banged on about in this newsletter before, the thing that bothers me most about A.I. right now is that it’s very difficult to methodically or rigorously assess many of the claims around GPT-4 given OpenAI’s general lack of transparency.

>Twitter thread from Subbarao Kambhampati

A thread from the A.I. researcher Subbarao Kambhampati, highlighting that GPT-4 still falls short on tests of its ability to plan ahead, outlines a challenge for A.I. researchers:

The performance on [planning tests] improved a bit--from ~5% to ~30%. But is this because the reasoning improved or because our benchmarks on github became fodder for GPT4 training, and GPT4 is still merrily pattern matching? So karthikv792 decided to test with an obfuscated BW domain where domain model words were mapped to other meaning-bearing words that hide connections to blocks. The result? The mighty #GPT4 plan correctness fell to ~3% 😱. […] This is why I remain skeptical about all the "LLMs can do reasoning" claims, as the performance may be coming from pattern matching. and showing that involves every harder tests--especially if the tests keep going into next generation training. […] As LLMs grow, paraphrasing Prof. Lambeau👇, there may eventually be just a handful of people who can tell the difference between them memorizing vs. reasoning. The blue pill/red pill qn of this era may well be: Do you want to be in that handful.
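The obfuscation test Kambhampati describes can be sketched as a consistent word substitution over a Blocksworld (“BW”) problem: the logical structure stays identical, and only the suggestive vocabulary changes. The mapping below is a hypothetical illustration, not the one used in the experiment, and the Python function is an assumption about the general technique rather than the researchers’ code.

```python
import re

# Hypothetical renaming; the experiment's actual vocabulary is not given in the thread.
OBFUSCATION = {
    "unstack": "unbraid",
    "stack": "braid",
    "block": "widget",
    "table": "harbor",
    "put": "release",
    "on": "craves",
}

def obfuscate(text, mapping=OBFUSCATION):
    """Swap each whole word that appears in `mapping`, leaving everything else untouched."""
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, mapping)) + r")\b")
    return pattern.sub(lambda match: mapping[match.group(0)], text)

plan_step = "unstack block a from block b; put block a on the table"
print(obfuscate(plan_step))
# unbraid widget a from widget b; release widget a craves the harbor
```

A system that is genuinely planning should do about as well on the renamed version as on the original; per the thread, GPT-4’s plan correctness instead fell from roughly 30 percent to roughly 3 percent.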

>“GPT’s Very Inhuman Mind,” by Reuben Cohn-Gordon

For a different perspective on LLMs and “intelligence,” this essay by Reuben Cohn-Gordon in Noema outlines and places in historical context some different cognitive-science perspectives on intelligence and its relationship to how different A.I. systems might work. It seems worth exploring his conclusion that LLMs are intelligent according to behaviorist definitions:

As is the cyclical way of these things, classical AI now finds itself losing ground to systems that evoke exactly the kinds of behaviorist perspectives it sought out to dismiss. If Ryle and Skinner were able to witness these developments, they would be doing whatever is the opposite of rolling in their respective graves. ChatGPT’s success is about the closest possible thing to a vindication of Skinner’s behaviorism and Ryle’s rejection of the ghost in the machine.

Jobs and the economy

What will A.I. do to the economy? Will it eliminate, augment, or ruin jobs? How will it change work?

>“Did ChatGPT Really Pass Graduate-Level Exams?” by Melanie Mitchell

Among the A.I. memes developing among the reactionary fraction of the tech class is the idea that (as Balaji Srinivasan puts it) A.I. “directly threatens the income streams of doctors, lawyers, journalists, artists, professors, teachers.” A set of recent papers, in which various professional exams were administered to ChatGPT, which it apparently “passed,” received wide coverage in the popular press, partly because they would seem to confirm this suggestion. I appreciated this more thorough, less meme-y coverage of those papers by the Santa Fe Institute professor Melanie Mitchell, who recently started an A.I.-themed newsletter:

There are dangers in assuming that tests designed to evaluate knowledge or skills in humans can be used to evaluate such knowledge and skills in AI programs. The studies I have described in this post all seemed to make such assumptions. The Wharton MBA paper talks of ChatGPT having abilities to “automate some of the skills of highly compensated knowledge workers in general and specifically the knowledge workers in the jobs held by MBA graduates including analysts, managers, and consultants.” The USMLE paper says that, “In this study, we provide new and surprising evidence that ChatGPT is able to perform several intricate tasks relevant to handling complex medical and clinical information.” I would not be surprised if systems like ChatGPT will be useful tools for lawyers, healthcare workers, and business people, among others. However, the assumption that performance on a specific test indicates abilities for more general and reliable performance—while it may be appropriate for humans—is definitely not appropriate for AI systems.

Mitchell’s book, AI: A Guide for Thinking Humans, has been recommended to me; I’ve put it on my list but haven’t cracked it yet.

>“OMG! What Will Happen When A.I. Makes BuzzFeed Quizzes?” by Madison Malone Kircher

I thought Madison Malone Kircher’s Times piece was the best one written about BuzzFeed CEO Jonah Peretti’s announcement that BuzzFeed would attempt to incorporate generative A.I. into its quizzes. (I meant to link to it in my own piece last week but it got lost in my struggle to get that newsletter out before EOD Friday. LOL.) The ambivalence toward both the A.I. and quiz-making in general on the part of the BuzzFeed writers Kircher talked to wasn’t well-reflected in other, more breathless writing about the announcement, and it was a good demonstration of the value of reporting on stories like this:

“I never had any delusions that the expertise that we were bringing to quiz making or list writing in the mid-2010s wasn’t expendable — I got laid off when I was 29, and that did not feel like a coincidence to me,” said Erin Chack, a former BuzzFeed senior editor who, like Mr. Perpetua, was laid off in 2019. “I started to age out of being culturally relevant, and they replaced me with a 23-year-old who they could pay half my salary, and it never hurt my feelings.”

>“Hustle bros are jumping on the AI bandwagon” by James Vincent

>“What YouTube Hustlers Can Teach Us About AI” by John Herrman

One of my favorite pieces among James Vincent’s excellent A.I. coverage at the Verge was this one on “hustle bros” and their love for ChatGPT and other generative A.I. applications. John Herrman recently picked up on the hustle-A.I. connection for another excellent piece about how the YouTubers and TikTokers selling A.I. hustles present a kind of crude, distilled version of the broad A.I. narrative:

If you spend enough time watching hustle-culture videos, you notice some patterns: Big up-front promises are rarely kept; specifically, as they are disclosed, plausible-sounding plans are revealed to be incomplete with crucial missing steps and magical assumptions. The YouTuber, who is already successful (or who is pretending to be successful), clearly hasn’t tested them out and inevitably hands back responsibility to the viewer — these plans will work if you can execute. At best, they only know how they got rich; at worst, they’re misleading you for their own gain.

Likewise, the tech companies building these new AI products, which are fresh, well funded, and barely monetized, can only guess, not unlike an LLM, what is statistically most likely to come next in this sequence for the rest of us. It’s easy to confuse them with keepers of secret knowledge, but they don’t know where all this is going. For now, they’re telling stories, and lots of people are listening.

>“A Spreadsheet Way of Knowledge” by Steven Levy

In a similar vein, I was struck by this tweet from the writer and researcher Kevin Baker:

Baker’s thread sent me to this great old Steven Levy piece on VisiCalc, which is fascinating to read in 2023:

People tend to forget that even the most elegantly crafted spreadsheet is a house of cards, ready to collapse at the first erroneous assumption. The spreadsheet that looks good but turns makers themselves pay the price. In August 1984, the Wall Street Journal reported that a Texas-based oil and gas company had fired several executives after the firm lost millions of dollars in an acquisition deal because of “errors traced to a faulty financial analysis spread sheet model.”

An often-repeated truism about computers is “Garbage in, Garbage Out.” Any computer program, no matter how costly, sophisticated, or popular, will yield worthless results if the data fed into it is faulty. With spreadsheets, the danger is not so much that incorrect figures can be fed into them as that “garbage” can be embedded in the models themselves. The accuracy of a spreadsheet model is dependent on the accuracy of the formulas that govern the relationships between various figures. These formulas are based on assumptions made by the model maker. An assumption might be an educated guess about a complicated cause-and-effect relationship. It might also be a wild guess, or a dishonestly optimistic view.

One way of seeing this comparison is to say “everything will be basically fine!,” as this tweet from Conor Sen suggests:

But the simple replacement of one category of jobs with another is not necessarily a panacea. As Baker puts it, “everyone is focusing on scifi risks, but we have 50+ years of experience in what happens when capital tries to substitute expert, clerical, and bureaucratic labor with computation. it's even worse when it tries to do it on the cheap.” (Not to specifically call Kevin out but I’m hoping he will finish the longform piece on this subject he says he’s been working on, so I can link to it 🙏🙏🙏.)

>“The revolution will not be brought to you by ChatGPT” by Aaron Benanav

I was pleased to read Aaron Benanav’s convincing rebuttal, in The New Statesman, of the too-frequently-made claim that A.I. is going to automate millions of jobs out of existence:

In part, that is because computer experts turn out to be bad at predicting computers’ capacities for autonomous operation. At the same time, the range of tasks involved in most jobs turns out to vary far more than O-NET suggests. Jobs such as school teacher or lathe operator look different across workplaces in the United States, and vary even more so across Germany, India and China. Legal frameworks, collective bargaining agreements, wage-levels, comparative advantages and business strategies all shape how jobs evolve, in terms of technologies used and tasks required. […]

That rate of technical change is well within what a healthy economy can handle, which is not to say that our economy is healthy. For reference, something like 60 per cent of the job categories people worked in in the late 2010s had not yet been invented in 1940. Still, even if the vast majority of jobs are unlikely to disappear, and if many new jobs are likely to be created, the nature of work will change due to the implementation of technologies like ChatGPT. We need to shift our thinking about how that change occurs.

>“Musicians Need Labor Rights More than Copyrights,” by Damon Krukowski

For its part, the RIAA seems to be pursuing the copyright problem aggressively. But I also liked this piece from Galaxie 500’s Damon Krukowski, arguing that “Musicians Need Labor Rights More than Copyrights”:

In other words, copyright isn’t effective protection on its own for artists, capital is. If you don’t have the capital to go to court against a corporation like Ford or Frito-Lay, you have to assume they will copy your work if they so choose. Which leaves artists to negotiate from a position of weakness, regardless of copyright ownership. Is that so different from what we will now face with AI?

But what if we organize artists to fight not for copyright in this new technological moment, but labor rights: fair compensation, health care, paid leave, retirement benefits, all the basic securities that workers have won from employers or governments through collective action. That is a struggle with many more 20th-century victories to point to than Bette Midler and Tom Waits. Yet it’s a struggle creative artists - and musicians in particular - may have thought didn’t apply to them because of their status as intellectual property owners. It’s been so easy to declare copyright ownership of music, and get nothing from it.

The business (and science) of A.I.

Who are the major players in A.I.? How are they going to make money? How does this affect A.I. research? Who are they exploiting?

>“Google and Bing Are a Mess. Will AI Solve Their Problems?” by John Herrman

I thought this was the best piece about this week’s “big” A.I. news -- the announcements of Google’s and Bing’s respective A.I. chat “search” applications.

Very different things are going on, here, under the hood, and I don’t mean to suggest the underlying technology here is insignificant or irrelevant. But the contrast is fascinating for reasons that have nothing to do with machine learning. The conventional search-engine interface is terrible on its own terms — the first thing users see, even in this technology demo, is a row of purchase links and an advertising pseudo-result leading to a website I’ve never heard of. The “search,” such as it is, is immediately and constantly interrupted by the company helping you conduct it.

The chat results are, by contrast, approximately what the user asked for: a list with no ads and a bunch of links, and a summary of the sorts of articles that current Bing users would have encountered eventually, after scrolling past and fending off the various obstacles and distractions and misdirections that are typical of modern search, as designed by Google and Microsoft. Microsoft is showing off its OpenAI integration, here, but it’s also just choosing a different way to display information — one that it could have used for years.

It’s a good companion piece to Herrman’s previous column about the en-junk-ification of Amazon: Both are about the ways in which the monumental platform success stories of the last two decades have completely abandoned the astonishing frictionless ease that made them such transformatively popular businesses. It’s been noted many times that A.I. hype has replaced crypto hype, but it’s worth considering the longer timeline, too -- A.I., an extremely, sometimes shockingly, novel technology, is emerging just as the Big Websites, larded up with ads and drop-shippers and other players in the algorithm economies, are getting extremely annoying to use. (“Enshittifying,” as Cory Doctorow puts it.) As Herrman points out, A.I. might provide a better search experience, but so, too, would abandoning the ad-auction model and various related design decisions. And even if A.I. does eventually provide a markedly better search experience … what’s going to stop it from being enshittified in the same way?

(For more specific cold water on Google/Bing A.I., there’s also “Oops! How Google bombed, while doing pretty much exactly the same thing as Microsoft did, with similar results” by Gary Marcus)

>“The Sparks of AGI? Or the End of Science?” by Gary Marcus

The main reason I have trouble being “curious” or “positive” about A.I. is also the biggest fear I have about the tech: That it’s excessively concentrated in the hands of private companies who are being unconscionably secretive about the models they’re building and deploying, and who tend to be run by immodestly stupid and annoying people. When you read something like this, do you feel good or confident about the fact that this guy is in charge of one of the major machine-learning institutions?

Gary Marcus has an excellent and typically excoriating newsletter on this non-transparency as an anti-scientific travesty:

Perhaps all this would be fine if the companies weren’t pretending to be contributors to science, formatting their work as science with graphs and tables and abstracts as if they were reporting ideas that had been properly vetted. I don’t expect Coca Cola to present its secret formula. But nor do I plan to give them scientific credibility for alleged advances that we know nothing about.

Now here’s the thing: if Coca Cola wants to keep secrets, that’s fine; it’s not particularly in the public interest to know the exact formula. But what if they suddenly introduced a new self-improving formula with the potential, in principle, to end democracy or give people potentially fatal medical advice or to seduce people into committing criminal acts? At some point, we would want public hearings.

Microsoft and OpenAI are rolling out extraordinarily powerful yet unreliable systems with multiple disclosed risks and no clear measure either of their safety or how to constrain them. By excluding the scientific community from any serious insight into the design and function of these models, Microsoft and OpenAI are placing the public in a position in which those two companies alone are in a position to do anything about the risks to which they are exposing us all.

>“OpenAI’s policies hinder reproducible research on language models” by Sayash Kapoor and Arvind Narayanan

Sayash Kapoor and Arvind Narayanan make a point similar to Marcus’s about OpenAI’s deprecation of some of its closed models, which makes it impossible for researchers to reproduce findings and, in turn, very difficult to assess some of the wildest claims being made:

Concerns with OpenAI's model deprecations are amplified because LLMs are becoming key pieces of infrastructure. Researchers and developers rely on LLMs as a foundation layer, which is then fine-tuned for specific applications or answering research questions. OpenAI isn't responsibly maintaining this infrastructure by providing versioned models.

Researchers had less than a week to shift to using another model before OpenAI deprecated Codex. OpenAI asked researchers to switch to GPT 3.5 models. But these models are not comparable, and researchers' old work becomes irreproducible. The company's hasty deprecation also falls short of standard practices for deprecating software: companies usually offer months or even years of advance notice before deprecating their products.

>“Will ChatGPT Become Your Everything App?” by John Herrman

Last week’s announcement that OpenAI was creating plugins for ChatGPT is a good marker for the moment the actual consequences of A.I. development and deployment--rather than the viral-thread predictions of economic ruin and total apocalypse--will start to be felt. I think I agree with John’s suggestion that the overall process by which OpenAI’s models insert themselves as layers into every aspect of our lives will actually feel extremely familiar, and probably quite annoying:

ChatGPT with an app store is a real product aimed directly at regular people, not a testing environment or tech demo. It gives a better sense of what OpenAI might actually try to do as a company than a hundred aimless conversations about AGI, x-risk, or machine consciousness. It feels like an actual encounter with OpenAI as the well-funded start-up it is rather than as a research lab, series of viral social-media phenomena, or avatar of budding new tech epistemology. It’s a plan to give people a different way to use tools they already need and like and to give other companies a way to build new things in an ecosystem. If OpenAI’s partnership with Microsoft shows what its AI looks like as a feature in other companies’ platforms, ChatGPT plug-ins are an attempt to become a platform.

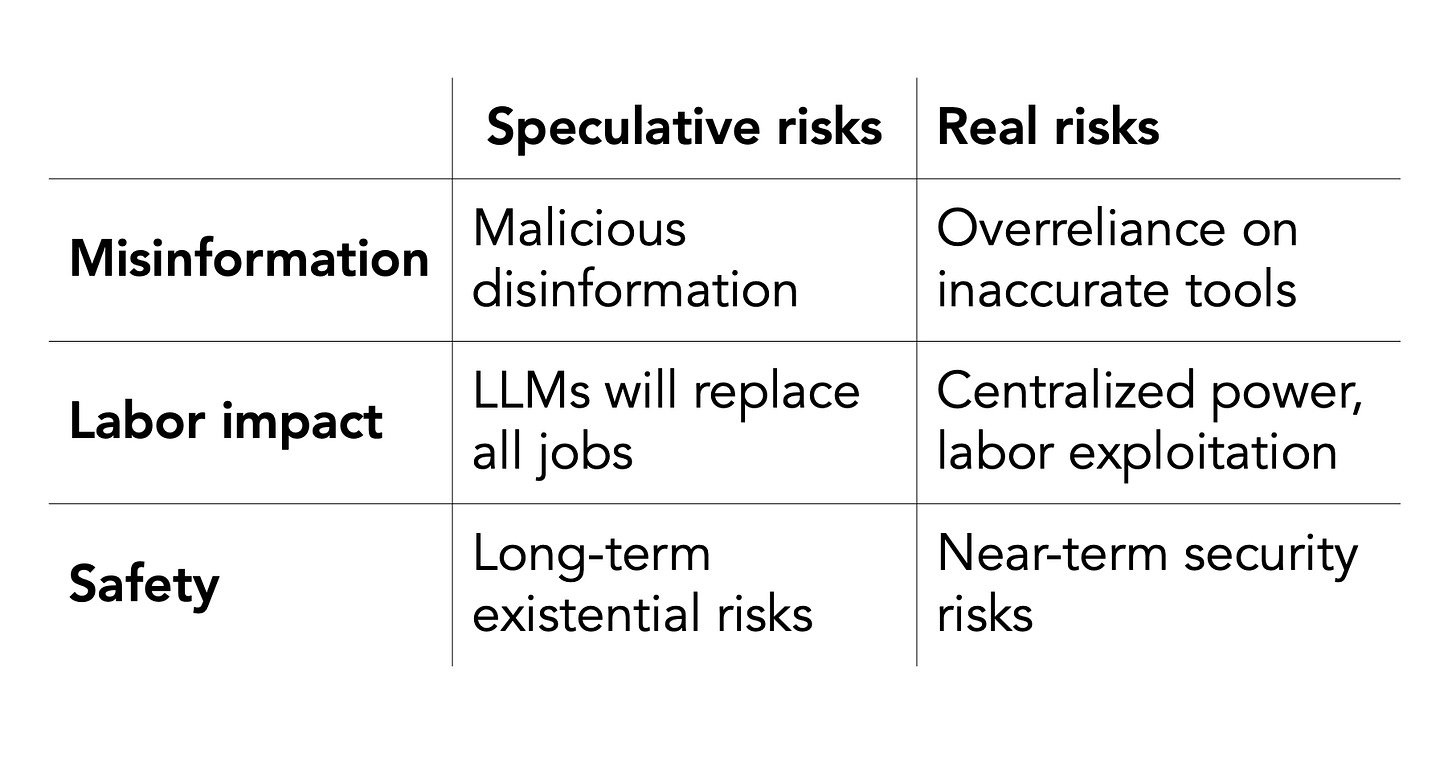

>“A misleading open letter about sci-fi AI dangers ignores the real risks,” by Sayash Kapoor and Arvind Narayanan

This piece from the dudes at A.I. Snake Oil was, I thought, the best and most straightforward response to the open letter published this week calling for a “six-month pause” on A.I. research. I hugely appreciate, among other things, the fact that they write like normal academics and not in the terrible “Twitter Thread tone” all A.I. discourse seems to take now.

>“Inside the secret list of websites that make AI like ChatGPT sound smart,” by Kevin Schaul, Szu Yu Chen and Nitasha Tiku

I really appreciated this Washington Post piece, which tries to pry open the black box by examining the websites that make up some A.I. training data.

>“AI Lies, Privacy, & OpenAI,” by Drew Breunig

One still-under-addressed question is how the small handful of corporations that control these models are dealing with one obvious legal problem with developing “unlimited text reading-and-reproduction machines”: Privacy. Drew Breunig has a long and detailed post about the privacy question, and, well, it’s not looking good:

How OpenAI is Addressing Privacy

Their Privacy Policy ignores the existence of personally identifiable information (PII) in their training data.

Their own blog post seems to acknowledge their inability to totally remove PII from their training data and their model output.

The same blog post says they don't use training data for advertising or profiling, but do not prohibit these use cases in their Terms of Use.

Rather than highlighting and discussing the differences between training data and models, and openly discussing the regulatory questions, OpenAI seems to deliberately avoid this tension.

Politics, culture, and spam

What are the politics of A.I. systems? How will they change our culture? Are we in for a tsunami of bullshit and spam?

>“AI Compute 101: The Geopolitics of GPUs,” by Jordan Schneider, Lennart Heim, and Chris Miller

I found this conversation about “compute”--i.e., computing power, an obviously key component of A.I. systems--on the newsletter China Talk useful as a primer on the importance of compute to A.I. (and the challenges that accompany it).

>“The Pentagon’s Quest for Academic Intelligence: (AI),” by Michael T. Klare

One consequence of large language models’ need for compute is that geopolitical competition and tension over semiconductor chip manufacture become a factor in A.I. development--and vice versa. The Nation’s Michael Klare has a dry overview of the many universities and research programs into which the military-industrial complex has spread new tentacles:

These programs, and others like them, are intended to spur academic research in advanced technologies of interest to the military and to bolster the Pentagon’s links to key academic innovators. But this is just one aspect of the military’s pursuit of academic know-how in critical fields. To better gain access to the essential “talent,” the armed services have sought to establish a physical presence on campus, allowing their personnel direct access to university labs and classrooms. […]

The university alliances described above represent but a small fraction of the many programs initiated by the Department of Defense in its ongoing drive to exploit academic know-how in the development of future weapons. The JUMP and UCAH programs, for example, incorporate many more institutions than those identified above. Together, these programs constitute a giant web of Pentagon-academic linkages, stretching from Washington, D.C. to colleges and universities all across the United States.

In virtually every one of these alliances, the installation on campus of Pentagon-affiliated research projects has been welcomed by university administrators with open arms. “This collaboration is very much in line with MIT’s core value of service to the nation,” said Maria Zuber, MIT’s vice-president for research, when announcing the establishment of the Air Force-MIT AI Accelerator. “MIT researchers who choose to participate will bring state-of-the-art expertise in AI to advance Air Force mission areas.”

(Thank you to reader Avi Z. for forwarding these two articles.)

>“What’s the Point of Reading Writing by Humans?” by Jay Caspian Kang

One thing I struggle with a bit as I write and think about A.I. is avoiding a kind of knee-jerk humanism, in which someone insists that there is something unique or particular about “human” thought or creation that will always necessarily separate it from machine-produced work. In general I think this kind of precious humanism is a dead end for a bunch of reasons, but it’s also an attractive way out of the challenges posed by human-passing A.I. Anyway, I was glad to read Jay Kang struggle with some of the same questions in this New Yorker piece, and to see him land near where I think I land, which is that what makes human-produced writing special isn’t the human component of it but the social component, or, as Jay says, “I enjoy reading human writing because I like getting mad at people.”

>“Prepare for the Textpocalypse” by Matthew Kirschenbaum

I’m a little sick of pieces warning of impossibly dark tech futures (and I say that as someone who has written many of them himself!), but Kirschenbaum’s piece manages to avoid the kind of soft-headed frittering that a lot of these essays participate in. I don’t agree with all of it, but I also like that it’s a piece about meaning in a post-A.I. world that only briefly touches on “disinformation”:

Say someone sets up a system for a program like ChatGPT to query itself repeatedly and automatically publish the output on websites or social media; an endlessly iterating stream of content that does little more than get in everyone’s way, but that also (inevitably) gets absorbed back into the training sets for models publishing their own new content on the internet. What if lots of people—whether motivated by advertising money, or political or ideological agendas, or just mischief-making—were to start doing that, with hundreds and then thousands and perhaps millions or billions of such posts every single day flooding the open internet, commingling with search results, spreading across social-media platforms, infiltrating Wikipedia entries, and, above all, providing fodder to be mined for future generations of machine-learning systems? Major publishers are already experimenting: The tech-news site CNET has published dozens of stories written with the assistance of AI in hopes of attracting traffic, more than half of which were at one point found to contain errors. We may quickly find ourselves facing a textpocalypse, where machine-written language becomes the norm and human-written prose the exception.

Whether or not a fully automated textpocalypse comes to pass, the trends are only accelerating. From a piece of genre fiction to your doctor’s report, you may not always be able to presume human authorship behind whatever it is you are reading. Writing, but more specifically digital text—as a category of human expression—will become estranged from us.

There’s also an interview with Kirschenbaum at The Scholarly Kitchen.

>Twitter thread by Nick Srnicek

This thread from Nick Srnicek, the author of Platform Capitalism, contains a bunch of recommendations for reading about the political economy of A.I. These all sound essential; looking forward in particular to reading Meredith Whittaker’s piece about concentration in A.I. research.

👽 Spooky/Fun 👻

Shitposts, horror stories, cranks

>“ChatGPT Can Be Broken by Entering These Strange Words, And Nobody Is Sure Why,” by Chloe Xiang

Hands down my favorite spooky A.I. story in a while:

Jessica Rumbelow and Matthew Watkins, two researchers at the independent SERI-MATS research group, were researching what ChatGPT prompts would lead to higher probabilities of a desired outcome when they discovered over a hundred strange word strings all clustered together in GPT’s token set, including “SolidGoldMagikarp,” “StreamerBot,” and “ TheNitromeFan,” with a leading space. Curious to understand what these strange names were referring to, they decided to ask ChatGPT itself to see if it knew. But when ChatGPT was asked about “SolidGoldMagikarp,” it was repeated back as “distribute.” The issue affected earlier versions of the GPT model as well. When an earlier model was asked to repeat “StreamerBot,” for example, it said, “You’re a jerk.” […]

“I've just found out that several of the anomalous GPT tokens ("TheNitromeFan", " SolidGoldMagikarp", " davidjl", " Smartstocks", " RandomRedditorWithNo", ) are handles of people who are (competitively? collaboratively?) counting to infinity on a Reddit forum. I kid you not,” Watkins tweeted Wednesday morning. These users subscribe to the subreddit, r/counting, in which users have reached nearly 5,000,000 after almost a decade of counting one post at a time.

“There's a hall of fame of the people who've contributed the most to the counting effort, and six of the tokens are people who are in the top ten last time I checked the listing. So presumably, they were the people who've done the most counting,” Watkins told Motherboard. “They were part of this bizarre Reddit community trying to count to infinity and they accidentally counted themselves into a kind of immortality.”

>“Is GPT-3 a Wordcel? 🐍 & Silicon Valley’s New Professional Nihilism” by Harmless AI

I have no idea what’s going on in this newsletter, and I’m pretty sure it’s very stupid, but I have a soft spot for this kind of loony voice-of-god outsider internet-weirdo stuff, and it’s a good window into what the bleeding edge of A.I. freaks are thinking.

>“Replacing my best friends with an LLM trained on 500,000 group chat messages,” by Izzy Miller

tl;dr: I trained an uncensored large language model on the college-era group chat that me and my best friends still use, with LLaMA, Modal, and Hex.

The results will shock you.

Further reading

Books and newsletters for interested readers.

Some (approachable) books that might be helpful

You Look Like a Thing and I Love You, by Janelle Shane

Atlas of A.I.: Power, Politics, and the Planetary Costs of Artificial Intelligence by Kate Crawford

Artificial Intelligence: A Guide for Thinking Humans, by Melanie Mitchell

Artificial Intelligence: A Modern Approach, by Stuart Russell and Peter Norvig (Liberal arts majors can read just the first chapter)


