You may have recently seen some viral examples of text generated by GPT-3, a “natural language processing system” that draws on a huge database to complete prompts. GPT-3 is among the most complex and advanced language models ever created; with only a minimal prompt it can “write” text so fluent and appropriate it’s often indistinguishable from that written by a human.

For example, here’s someone on Twitter using it to make a fake transcript from the Nixon tapes in which Nixon and Haldeman discuss eating ass:

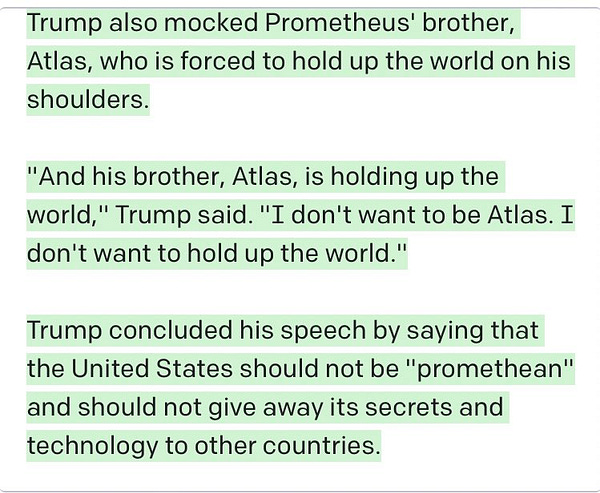

GPT-3 was introduced in 2020, alongside a number of writeups describing its output as “terrifyingly good.” Since then, access has been limited by a wait list, and examples of GPT-3’s potential were only slowly trickling out to the wider public, as in this Guardian column “written” by GPT-3, or on Janelle Shane’s great blog AI Weirdness. Since late last year, however, the wait list has been opened, and now anyone can get into the GPT-3 beta and play around, resulting in a recent flood of Twitter posts with screenshots of the GPT-3 Playground’s characteristic green-highlighted text. The astonishing power of GPT-3 has been used to, e.g., create a thread of news articles about former president Donald Trump insulting Greek mythological figures:

Or another news article about President Joe Biden claiming to have met Super Mario, the titular plumber of the Mario Bros. video-game series, in the 1970s:

Prompted by boredom, FOMO, and the need for a subject for this week’s newsletter, I recently signed up for access to the GPT-3 API to play around in its sandbox (you can do the same here). My main conclusion is that I think it could very easily replace 99 percent of activity on Twitter with almost no downgrade in overall quality.

GPT-3 works, in the context of the Playground interface most normal posters are using, by trying to complete whatever text you’ve begun to write. If you write half of a sentence, GPT-3 will complete the sentence with whatever the language model believes is most appropriate, based on its huge corpus of text; if you write a full sentence, it will try to determine what should come after that sentence. So you can give it imperative prompts, like

Tell me a story about Tony Soprano joining Hezbollah

And it will “complete” your prompt by telling the story:

Tony Soprano was a tough guy, a real tough guy. He was the kind of guy that you didn't want to mess with. He was also the kind of guy that you didn't want to get on the wrong side of.

So, when Tony Soprano joined Hezbollah, it was a real shock to everyone. Tony had always been a bit of a maverick, but this was something else entirely.

Hezbollah is a militant group that is dedicated to the destruction of Israel. They are responsible for suicide bombings and other terrorist attacks. They are also considered to be a very powerful and well-organized military force.

Tony Soprano joining Hezbollah was a real game changer. It showed that he was serious about his commitment to the destruction of Israel. It also showed that he was willing to use violence to achieve his goals.

Tony Soprano quickly rose through the ranks of Hezbollah. He became one of their most important and respected leaders. He was instrumental in planning and carrying out attacks against Israel.

Under Tony's leadership, Hezbollah became even more powerful and dangerous. They carried out some of the most devastating attacks in their history.

Tony Soprano's story is a cautionary tale. It shows that even a tough guy like Tony can be turned to violence if he is not careful. It is a reminder that we all need to be vigilant against the threat of terrorism.

This is a pretty characteristic GPT-3 text, which is to say it reads like what a smart and contemptuous 10th-grader would produce based on the prompt. The prose is flavorless; the plot is dry; the vibe is “bare minimum effort”; there appears to be some text interpolated directly from Wikipedia. It is “funny,” but more or less accidentally, as a product of combining recognizable proper nouns in a surprising context.
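For the curious, the "complete whatever text you've begun" behavior can be sketched with a toy model. To be clear, this is not how GPT-3 is built (GPT-3 is a very large neural network trained on a huge corpus); this is just a word-pair lookup table, and the names `build_bigram_table` and `complete`, along with the tiny corpus, are invented for illustration. It only shows the general idea of extending a prompt one word at a time based on what tended to come next in the training text.

```python
import random
from collections import defaultdict


def build_bigram_table(corpus):
    """Map each word to the list of words that follow it in the corpus."""
    words = corpus.split()
    table = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        table[current_word].append(next_word)
    return table


def complete(prompt, table, max_new_words=10, seed=0):
    """Extend the prompt one word at a time using the bigram table.

    Each new word is sampled from the words that followed the
    previous word in the corpus. Stops early at a dead end.
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same text
    words = prompt.split()
    if not words:
        return prompt
    for _ in range(max_new_words):
        candidates = table.get(words[-1])
        if not candidates:
            break  # the last word never had a successor in the corpus
        words.append(rng.choice(candidates))
    return " ".join(words)


# Hypothetical toy corpus; a real model would see vastly more text.
corpus = "tony was a tough guy . tony was a real tough guy . he was a tough guy ."
table = build_bigram_table(corpus)
print(complete("tony was", table))
```

Even this toy version hints at why the output feels generic: the model can only recombine what it has already seen, so the most common phrasings win.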

GPT-3 works somewhat better when you give it some guidance, and especially when it’s given guidance that allows it to draw from a particularly large and specific corpus of text. Like podcast transcripts, e.g.

Write a transcript of Tony Soprano on the Joe Rogan podcast telling Joe about joining Hezbollah

Joe: So Tony, what made you want to join Hezbollah?

Tony: I was always interested in the Middle East and the conflict there. When I was approached by Hezbollah, it just made sense.

Joe: And what do you do for Hezbollah?

Tony: I'm a soldier. I fight for the cause.

Joe: That's amazing. I had no idea you were involved in that.

Tony: Yeah, it's been a great experience. I've seen a lot of things and met a lot of people.

Joe: I bet. So, what do you think about the current situation in the Middle East?

Tony: It's a powder keg. There's so much tension and hatred. I think it's only a matter of time before it all explodes.

Joe: Yeah, I've been saying that for years. It's just a matter of when, not if.

Tony: Exactly.

Not bad, but does this really sound like Tony Soprano and Joe Rogan would in this conversation, or are we simply bringing to bear our own complex imaginative models? (My feeling is that Rogan sounds basically like Rogan would, but the dialogue given to Tony is generic.) It works better if you give it some primer text to give the AI a little bit of direction. The following example begins with a prompt and a section of a transcript from a recent Trump rally:

Transcript of Donald Trump rally in which Trump goes on a rant about Tony Soprano joining Hezbollah

Donald Trump: (15:12) We came up with a lot of great ones. Crooked Hillary. How crooked is Hillary Clinton? Did you see last week? It was big Thursday. They came out. They got into the law firms, I guess, or somebody. I don’t know. They got into various people that were having to do with the Russia hoax. And they have emails. I can’t believe this is going on. They’re believing this stuff about Russia, Russia, Russia. Let’s keep it going as long as we can. They have emails between the FBI and the law firm. What is it, Baker and something, that are talking about the hoax, the Russia hoax. They’re talking about it being a hoax. They’re laughing at it. They’re saying it’s a hoax. They’re talking about Hillary being guilty as hell. They’re talking about all of this stuff. They’re talking about, “We’ve got to stop this.”

And then produces this (full disclosure: it took a few tries to get something this good):

Donald Trump: (15:50) Now, I have to say this. I have to say this. Tony Soprano, he’s a good guy. He’s a good guy. But he joined Hezbollah. He joined Hezbollah. Hezbollah is a terrorist organization. They’re killing innocent people. They’re blowing up buildings. They’re doing all sorts of terrible things. And Tony Soprano, he joins them. He joins them. And I’m saying to myself, “Why? Why would a guy like Tony Soprano join a terrorist organization?” And then I realized, you know what? He’s Italian. He’s Italian. And the Italians, they’re not the brightest people in the world. They’re not the brightest people in the world.

The more of a given category of text there’s likely to be in GPT-3’s data, the more accurate its imitations of that text. Unsurprisingly, given the reams of transcripts of Trump speeches, tweets, and media appearances, it can do a great Trump; its rendition of, say, Austen, working off of only six or so novels, is not as good but not atrocious. This prompt, e.g.,

Story about Tony Soprano joining Hezbollah in the style of Jane Austen

IT is a truth universally acknowledged, that a single man in possession of a good fortune must be in want of a wife.

However little known the feelings or views of such a man may be on his first entering a neighbourhood, this truth is so well fixed in the minds of the surrounding families, that he is considered as the rightful property of some one or other of their daughters.

"My dear Mr. Bennet," said his lady to him one day, "have you heard that Tony Soprano joined Hezbollah?"

Produces:

Mr. Bennet replied that he had not.

"But it is true," returned she; "for Jane saw him yesterday with her own eyes as she was coming home from a walk with Mr. Bingley."

Mr. Bennet made no answer.

"Do you not want to know what she saw?" cried his wife impatiently.

"You are always anxious for me to join Hezbollah," said he; "if it will give you any pleasure, I will join them."

"It would give me great pleasure," replied she.

"But I do not like the idea of Tony Soprano joining Hezbollah," said Mr. Bennet.

"I am sure I do not know why you should not like it," returned she; "but you always have some objection or other to every thing I propose."

"Have you any other objection," said Mr. Bennet, "to my joining Hezbollah, than that of my dislike of Tony Soprano?"

"No, I think not," replied his wife.

"Then I will join Hezbollah," said Mr. Bennet; "and I am sure I shall be very happy."

I’m not sure I quite follow the conversation that the Bennets are having, but the prose and dialogue do sound passably like Austen.

I furnish these examples as a way of trying to explain what I have been thinking about the model after a week of playing around: As I think the texts generally show, GPT-3 is not likely to threaten writers whose work depends on their ability to write stylishly, or to synthesize information accurately, or to come up with imaginative connections or unexpected imagery. On the other hand, GPT-3 does threaten writers whose work is responding quickly, legibly, and relatively accurately to prompts. What kinds of writers do I mean by this? Well, for example, the people who write articles keyed to popular search topics (the kind of cruft that comes up when you Google “Jerome Powell feet” or “what channel is the tennis on”) are certainly more expensive to pay than the server space it would take to run a program that prompted GPT-3 to write article copy every time a new trending search emerges. (I think I’m already a few years behind on this; I assume most of the articles you encounter in trending-topic search results are produced by GPT-3 or other language models.)

But those writers aren’t the only ones whose output is mostly generic responses to algorithmic prompts. What playing with GPT-3 reminded me of was less HAL 9000 than the experience of Twitter: pressing a button to see what strange, funny, outlandish thing might be said next. Want to see the strangest, stupidest, most extreme opinion? GPT-3 can fashion one just as bizarre as any anonymous Twitter account. Want to see a funny fake dialogue in which Tony Soprano, Christopher, and Paulie argue about Chronic Lyme Disease? GPT-3’s is probably as good as the one written by the guy in Altoona DSA whose display name is BUILD MORE TRAMS. The tech industry has done an admirable job of conditioning us to read and write like advanced robots. But now the advanced robots are here, and I’m not sure what else we have to offer. Twitter users offer up their own strange, distributed language model en masse, producing and then voting on hundreds of thousands of possible completions for prompts like “She’s a ten, but,” all of them as variously appropriate and confused, funny and confusing, as the responses you’d get out of GPT-3 might be. Why do you even need humans?

This could be very useful for cable news presenters who are required to talk non-stop during a major crisis. It also brings to mind a trend happening over at Audible. It's being flooded with short "books" that are created in response to keyword searches by individuals who hire ghost writers, narrators and graphic artists. I'm not saying there's anything wrong with this - just that it makes it harder to sift through all of the offerings to find something written by someone who actually knows something.

"This is a pretty characteristic GPT-3 text, which is to say it reads like what a smart and contemptuous 10th-grader would produce based on the prompt."

As a whole, yeah, but it reads more like a (poorly done) Times piece about such and such being radicalized. I would assume that's part of the text being quasi-plagiarized here.

"The tech industry has done an admirable job of conditioning us to read and write like advanced robots."

The writing advice about polished prose seems, a lot of the time, to result in a lifeless wet mound of pulp: it's readable and conveys the information but it doesn't do all that much. I think that's me agreeing with you. Hard to say, because reading the examples I'm experiencing near-instant Turing test collapse. (Maybe because I have fussed with Eliza long ago, and it wasn't much worse quality than this? Or possibly because I have read enough ordinary people chatting over almost four decades that an individual voice underlying 'polished prose' can be picked out or noticeably missing?)

I have seen two types of web prose that look machine-generated: a) the SEO-jamming link text page, and b) the very generic 'recommendations page' and none of this is quite good enough for that. It's going to be hard to tell when very badly written pages are generated by a human or a machine and I assume some of that is going on. This stuff is nowhere near good enough to pass for human at length. (It's very similar in quality to the faces generated by DALL-E: the generator can't manage coherence.)

It's not distinct enough for Twitter either. Reading this post has convinced me of the opposite conclusion: in exactly the same way that AI driving isn't good enough, this thing is not up to snuff.

(I can think of a way it can be used though: to generate conservative/centrist op-ed boilerplate, along with some liberal boilerplate. That is likely because those folks are just rewording press releases and talking point releases. So you could maybe populate part of Town Hall with this stuff. You could also, perhaps, use it as a skeleton generator and then essentially work out why it's wrong on paper while rewriting the skeleton. Maybe.)

elm

articles of this type are basically 'if it bleeds, it leads' stories for the chattering precariat, yeah?